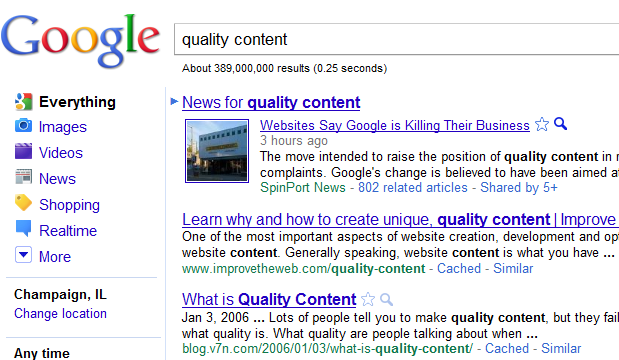

Google recently made a major change in its algorithms in order to improve the quality of search results. It affects about 12% of searches, making it much more significant than its frequent smaller tweaks.

It’s a timely development, given the number of people claiming that Google is losing the war against spam.

Google’s Amit Singhal and Matt Cutts announced that the update is intended to penalize “sites which are low-value add for users, copy content from other websites or sites that are just not very useful.” Conversely, it aims to reward sites with “information such as research, in-depth reports, thoughtful analysis and so on.”

The general consensus is that the move penalizes content farms: large websites that churn out huge volumes of content, often low in quality and written to attract as many search engine hits, and therefore as much revenue, as possible.

Many consider Demand Media, owner of eHow, to be the classic content farm, although the company tells a different story. Interestingly, Demand Media’s properties actually seem to have benefited from the update, while article marketing sites such as EzineArticles have seen major losses in the rankings, prompting EzineArticles’ CEO to make some big changes to its editorial standards.

But How Can a Computer Assess “Quality”?

The most interesting aspect of the algorithm change is the fact that a computer is trying to evaluate a very subjective concept: how “good” a particular piece of writing is.

Stopping sites that “copy content from other websites” is simple enough: Google goes after scrapers and autoblogs and makes sure they don’t come up for search queries.
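To show why copied content is comparatively easy to catch, here is a minimal Python sketch of one well-known near-duplicate technique: splitting text into overlapping word sequences ("shingles") and comparing the sets with Jaccard similarity. This is not Google's method, which isn't public; the function names, the 3-word shingle size and the 0.5 threshold are hypothetical choices for illustration.

```python
import re


def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def looks_copied(page: str, original: str, threshold: float = 0.5) -> bool:
    """Hypothetical rule: flag a page whose shingles heavily overlap the original's."""
    return jaccard(shingles(page), shingles(original)) >= threshold


original = "Google made a major change in its algorithms to improve the quality of search results."
scraped = original + " Click here!"  # a scraper copying the text and adding its own bit
rewritten = "Search quality was the goal of a big shift in how Google ranks pages."

print(round(jaccard(shingles(original), shingles(scraped)), 2))  # 0.87
print(looks_copied(scraped, original))    # True
print(looks_copied(rewritten, original))  # False
```

The scraped copy shares 13 of its 15 shingles with the original, so it is flagged, while the reworded sentence shares none. That is also the catch: exact or lightly padded copies are trivial to spot this way, but unique writing that is merely poor passes straight through.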

But assessing the quality of the content and the thoughtfulness of the analysis sure seems like something that would need to be done by a human.

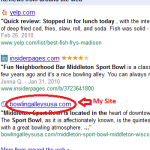

Although Google tightly guards the secrets of its algorithms, it’s clear they can do some amazing things. Overall, they rely heavily on inbound links to measure authority, but this update is more concerned with on-page factors.

Google can look at the keyword density to see if a site is overusing keywords in an attempt to manipulate the rankings. It also might take into account the time users spend on the page and the bounce rate (% of visitors who leave the site instead of viewing more pages). The algorithm can also evaluate outgoing links to other sites to see if it appears the writer is backing up his or her writing with authoritative citations.
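The first two signals mentioned above are simple ratios. Below is a minimal Python sketch of how they might be computed, assuming the common definitions: keyword density as occurrences of a single-word keyword divided by total words, and bounce rate as single-page sessions divided by all sessions. These are not Google's published formulas, and the sample text and session counts are made up for illustration.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in text equal to a single-word keyword (case-insensitive)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return 100.0 * words.count(keyword.lower()) / len(words)


def bounce_rate(pages_per_session: list[int]) -> float:
    """Percentage of sessions in which the visitor viewed exactly one page."""
    if not pages_per_session:
        return 0.0
    bounces = sum(1 for pages in pages_per_session if pages == 1)
    return 100.0 * bounces / len(pages_per_session)


stuffed = "Cheap shoes! Buy cheap shoes here, the best cheap shoes online."
print(round(keyword_density(stuffed, "shoes"), 1))  # 27.3 (3 of 11 words)

# Hypothetical sessions: the number of pages each visitor viewed before leaving.
sessions = [1, 1, 3, 1, 5, 2, 1, 1]
print(bounce_rate(sessions))  # 62.5 (5 of 8 sessions)
```

Neither number means much on its own: a high density might just reflect a short, focused page, and a high bounce rate might mean the visitor found the answer immediately. That is why such signals only become useful in combination with many others.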

While none of these factors by itself can assess a website’s quality, just the right combination of thousands of them might be able to get the job done.

Ironically, though, Google seems to recognize the need for human intervention, which appears to go against its mission. First, the company has revealed that employees change index ratings in order to improve search quality.

Also, as I mentioned in my post about the problem with online tutorials, Google just unveiled a new Chrome extension that lets users block sites they deem low-quality from their own search results. The extension sends this information to Google, which can make changes based on user behavior. (This data was not used in the recent update, however.)

What do you think about all of this?

I think this new update shows that Google is “judging quality” here largely by the amount of duplicate content a website hosts. The article directories that got hit host loads of duplicate content – loads and loads of it. Algorithmically, that’s easy to detect.

eHow, on the other hand, has “bad” content – but most of it IS unique, even though it hasn’t always been that way. How can Google decide what’s “bad” in this case as opposed to what’s “good”, beyond checking whether the domain hosts tons of duplicate content? Hard to say – they do have filters for reading levels now, which may be a first step towards distinguishing poor writing from good writing.

Thanks for the post, Andrew – nice summary!

Thanks Ross!

Now that you mention it I think I do remember hearing about the reading level filters, but I don’t know much about them. That’s a pretty interesting idea and I’m curious to see how it develops.

Duplicate content is definitely a big factor. From what I’ve read, there seem to be some grumblings about how the update has handled it. Lots of webmasters are complaining that they were “unjustly” penalized in the rankings while sites that scraped their content now rank above them. (There’s some discussion on WebmasterWorld.)

I guess we’ll have to see how Google continues to tweak things.

Hey Andrew,

I started out writing a comment to your article with my thoughts on the update, but ended up with 600+ words…

So I posted it on my blog and linked back to you.

Thanks, Bryan. And nice post!

There are clearly two sides of the fence here. The companies that got affected are clearly mad that they have to suffer because Google is profiling their content as “low quality”. I can see how that would be a low blow, but at the same time, those same websites follow no niche whatsoever. I don’t have any doubt in my mind that a lot of the content on these “farms” was taken from low-ranking websites that generated no traffic and used for the farms’ own benefit. Plus, the whole focus on “revenue” leaves a bad taste in my mouth; they prioritize it above everything else.

I support Google’s change and I hope it lets original content get higher on the rankings without being scraped by these farm sites.

Hey Nicholas,

Yeah, I’ve seen a lot of forum posts and blog comments from very upset webmasters who think they’re being unfairly punished. Most of the time, though, their arguments don’t really stand up to scrutiny and it looks like they’re just venting because they know their whole business model of mass cheap content is being destroyed.

Really good SEOMoz article that theorizes in detail the likely causes for the ranking changes:

http://www.seomoz.org/blog/googles-farmer-update-analysis-of-winners-vs-losers

In my opinion, all of Google’s recent updates, especially Panda, have really missed the mark. Quality aged domains are getting hit or de-indexed for no reason. Take EzineArticles, for example. Oops….